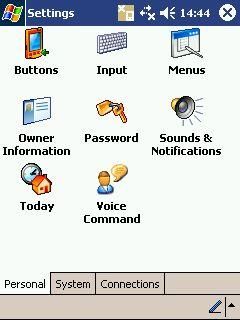

Most of you already know that I began my digital life as a Newton guy. To this day my MessagePad 2000U can do things that even the latest smartphones running the most customized ROM still can’t. When Newton was later rolled back into Apple and then killed off, I switched to Pocket PC. It was cobbled together and didn’t come close to how well integrated Newton was, at least not until Voice Command came along. Rather than a built-in feature, MS Voice Command was a purchased app that you could install on your PDA. This had advantages and disadvantages, but it brought with it a whole new way of interacting with your device. It finally made the “P” in “PDA” representative of the “Personal” touch that was otherwise missing.

It wasn’t close to what Siri, OK Google, or even Cortana are today — but it was also much more, as confusing as that may sound.

OK Google, Siri, and Cortana are all voice-activated assistants. They help you do things without using your fingers — but they’re fairly limited, and they all require you to launch them. In essence they are a separate app that, once invoked, can listen to what you’re asking, but you’ve got to make the move to switch out of whatever app you’re in and change to another before you can ask your question or tell your smartphone or tablet what you want it to do.

Microsoft’s Voice Command was different. Although it wasn’t “always listening”, it was one button away almost all of the time. That’s the direction we need to be heading.

OK Google

I used to refer to “OK Google” as “Google Now”. I don’t any more. Sure, the two are very tightly coupled, but as voice features move further from the Google Now screen, and become more tightly coupled with the Android operating system — and eventually third-party apps — the “OK Google” keyword and associated functionality will become its own “thing”. As it should.

This falls right in line with the rumored “OK Google” Everywhere feature that we talked about last week, which may bring “OK Google” listening to every corner of your device.

Combine this with the fact that we’re starting to see hardware that enables an “always listening” mode that doesn’t kill your battery. The Moto X is the best example of this today, but modern Snapdragon processors include this functionality as well, even if it’s not being taken advantage of by anyone other than custom ROMmers and the like.

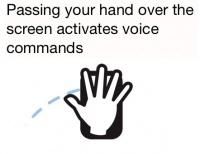

However, the custom “always listening” hardware doesn’t even need to be ubiquitous to take advantage of certain “almost” always listening scenarios. For example, when driving in your car, you might want to report a road hazard to warn other drivers. If you’re using your phone or tablet as a GPS, the screen is probably on, and the device could be waiting for your command. Using a speech-aware app, you could say a hotword followed by the report, all without taking your hands off the wheel or your eyes off the road.

While in that app you could ask your Android to change playlists if the music you’re listening to is getting stale. The OS could “hear” this and take the appropriate action, even though the app you’re in doesn’t know anything about what music player you prefer.

If you remember something along the road, a simple “Hey Google, remind me to pick up milk on the way home from work tonight” could not only be turned into a reminder, but thanks to geo-fencing and location awareness, your phone could even set a waypoint to ensure that you stop by the market on the way.

To get to this point, we need OS-wide voice awareness and listening all the time, especially when the screen is on. But even that level of integration can only carry us so far. Ultimately we need apps that are ready not only to listen to what we have to say, but also to respond in a uniform and consistent way. Apps could handle this on their own, but they shouldn’t. Everything needs to work the same way, and needs to work together, to prevent confusion.
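
To make the idea concrete, here is a minimal sketch in Python of one shared listener that apps plug into, rather than each app listening on its own. Everything in it is hypothetical: `VoiceRouter`, `register`, and `dispatch` are names made up for illustration and are not part of Android or any Google API. Only the “OK Google” hotword comes from the discussion above, and the sketch works on text that has already been transcribed, so it says nothing about how the speech recognition itself would work.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# The hotword comes from the article; the rest of this sketch is an assumption.
HOTWORD = "ok google"


@dataclass
class VoiceRouter:
    """Hypothetical OS-level router: the OS listens once, apps only register handlers."""

    handlers: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def register(self, keyword: str, handler: Callable[[str], str]) -> None:
        # An app claims a command prefix such as "play" or "remind me to".
        self.handlers[keyword.lower()] = handler

    def dispatch(self, utterance: str) -> Optional[str]:
        text = utterance.lower().strip()
        if not text.startswith(HOTWORD):
            return None  # no hotword, so the utterance is ignored
        # Drop the hotword plus any separating comma or spaces.
        command = text[len(HOTWORD):].strip(" ,")
        for keyword, handler in self.handlers.items():
            if command.startswith(keyword):
                return handler(command[len(keyword):].strip())
        # One shared fallback, so every app fails the same way.
        return "Sorry, I didn't catch that."


router = VoiceRouter()
router.register("remind me to", lambda rest: f"Reminder set: {rest}")
router.register("play", lambda rest: f"Playing {rest}")

print(router.dispatch("OK Google, remind me to pick up milk"))
print(router.dispatch("OK Google play my road trip playlist"))
```

The point of the design is that the navigation app in the driving example never needs to know which music player or reminder app you prefer. It only matters that each of them registered with the same router, and that unrecognized requests get the same response no matter which app is on screen.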

I guess that’s almost hands-free.

Most importantly, this solution, whatever form it takes, needs to be intelligent enough to respond to requests as we make them, rather than depending on us to learn a predefined set of commands to make it work. Common language, slang, and fuzzy logic are all going to play major parts.

The race is on. It’s up to the three powerhouses of Apple, Google, and Microsoft to see who can come up with the first personal digital assistant that is truly personal.

Your turn

What do you wish voice commands could do for you? How do you think voice assistants should work? What are the major pitfalls that need to be avoided? What do you think the perfect implementation of this technology will be? How much longer do you think it will be before we get there?

We’re eager to hear what you have to say! Head down to the comments and let us know your thoughts!