Google’s Search On live event was a busy one. The company introduced a slew of new features, such as ‘hum to search’ for discovering songs, along with meaningful upgrades for Google Lens, Search, and Maps. Most of the announced features touch in some way on aspects of life affected by the coronavirus pandemic, and they sound genuinely helpful. So, let’s quickly recap the most important ones:

Google Maps will keep you safe and prepared

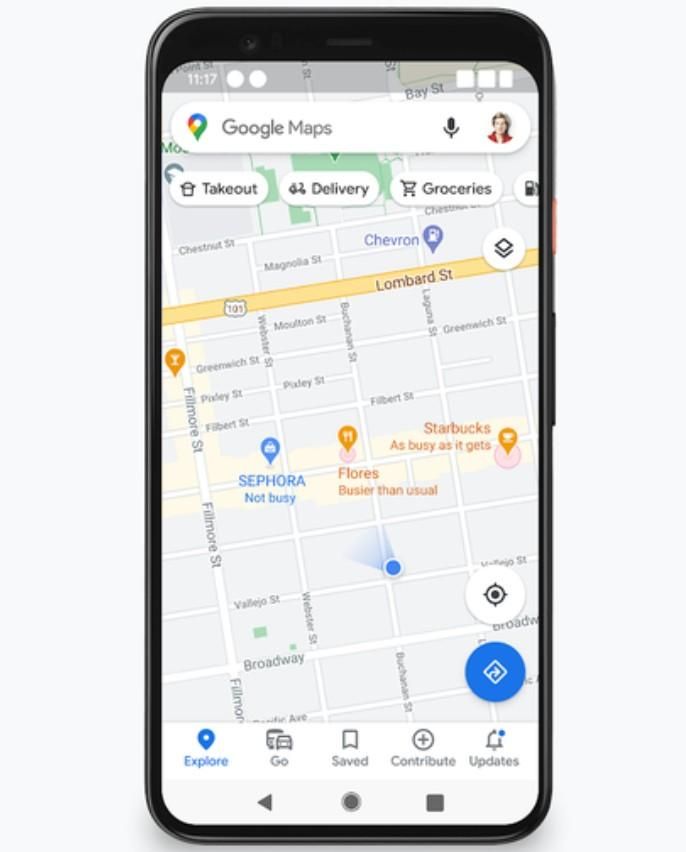

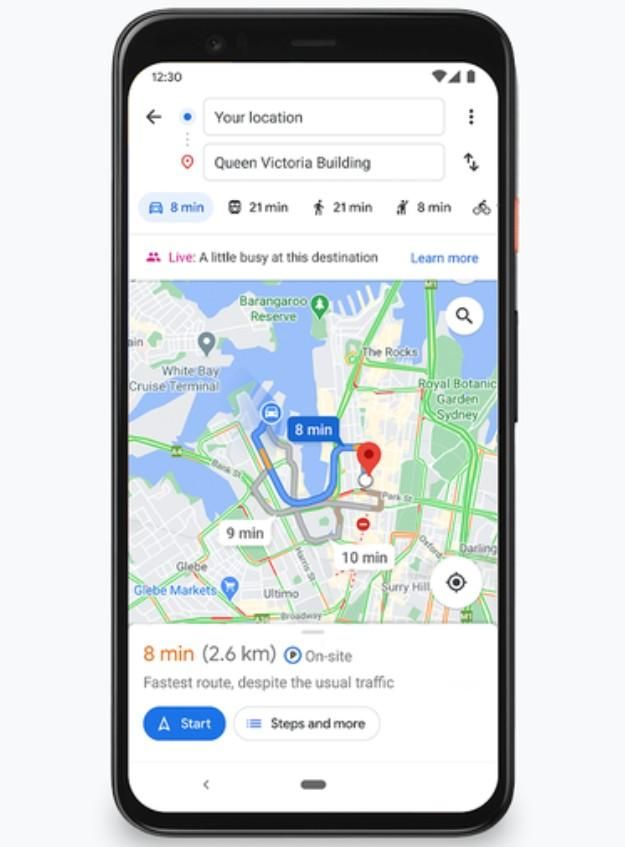

The ‘busyness’ feature on Google Maps shows how busy a place is at a given time or day of the week, helping users plan their visits to avoid crowded spaces, something that is of vital importance in the battle against a deadly pandemic. Google says it will extend live busyness information to more types of places, such as beaches, pharmacies, and grocery stores, while also expanding the feature’s reach fivefold.

Plus, busyness information will now be shown directly on Google Maps without the user having to search for a place, including while on the move. This feature will soon be available on Android, iOS, and desktop. In addition to real-time busyness information, users can also see a graph of how busy a place usually is over the course of a week.

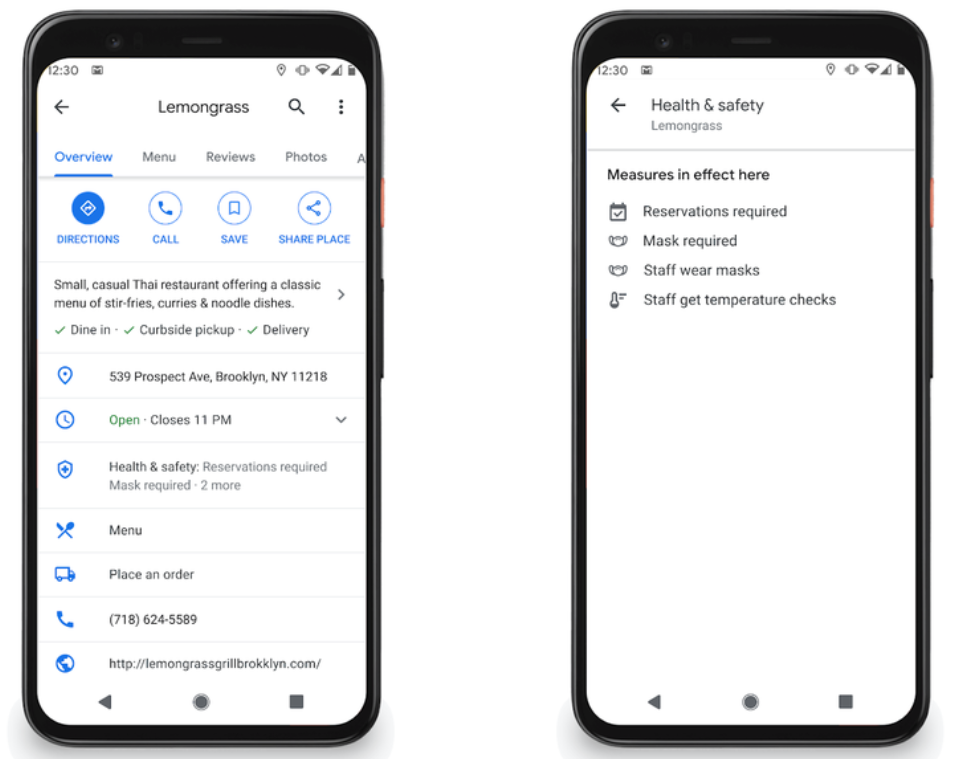

Google Maps will also show information about the health and safety precautions taken at a restaurant or shop. This information is contributed by businesses listed on Google Maps, but users will soon be able to add their personal experiences as well.

Lastly, users across the world will soon be able to use the Live View augmented reality feature to find more information about a place, such as when it opens, how busy it is, its star rating, and the safety measures it has put in place. All you have to do is open Live View, point your phone at a shop or building, and tap the icon above it.

A smarter search experience

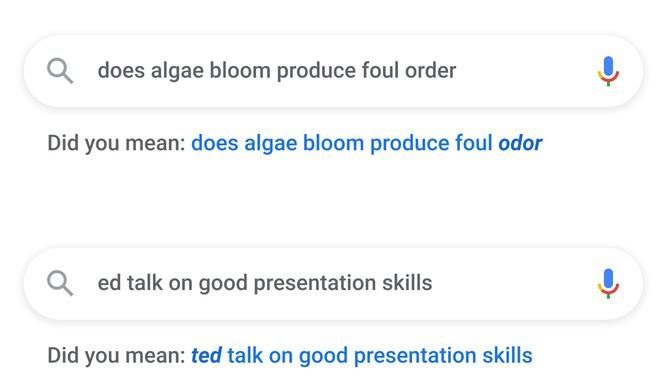

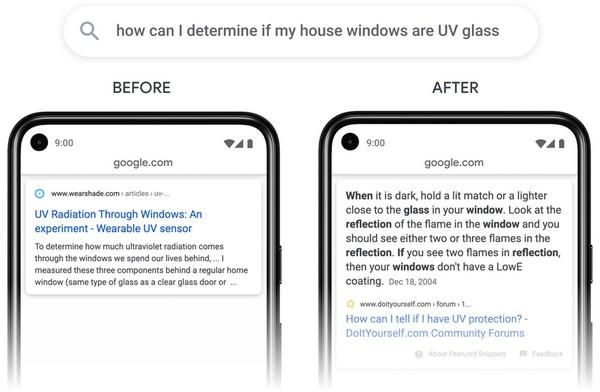

Google has announced that BERT language understanding is now used to process all search queries made in the English language. Plus, Google Search now relies on a new spelling algorithm that can detect grammar and spelling errors more efficiently. As a result, it is better at surfacing the results users are actually looking for, even when a query is mistyped.

Google Search is also making it easier for users to find answers to questions that require some explanation. To do so, it now indexes individual passages on a webpage in addition to the webpage itself. This makes it easier for the search algorithms to judge the relevance of each passage and bring up results that answer users’ queries.
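To make the idea of passage-level indexing a little more concrete, here is a minimal sketch in Python. It is purely illustrative and is not how Google Search works internally: the function names (`split_passages`, `score`, `best_passage`), the blank-line passage splitting, and the simple term-overlap score are all assumptions made for this example.

```python
import re


def split_passages(page_text):
    """Split a page into passages, using blank lines as boundaries (an assumption)."""
    return [p.strip() for p in re.split(r"\n\s*\n", page_text) if p.strip()]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def score(query, passage):
    """Toy relevance score: the fraction of query terms found in the passage."""
    query_terms = tokenize(query)
    if not query_terms:
        return 0.0
    return len(query_terms & tokenize(passage)) / len(query_terms)


def best_passage(query, pages):
    """Score every passage of every page and return (url, passage, score).

    `pages` is a hypothetical dict mapping a URL to that page's text.
    Returns None if there are no passages at all.
    """
    best = None
    for url, text in pages.items():
        for passage in split_passages(text):
            s = score(query, passage)
            if best is None or s > best[2]:
                best = (url, passage, s)
    return best
```

The point of the sketch is that each passage is scored on its own, so a single relevant paragraph can surface even when the rest of the page is about something else.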

Google has also started testing a new technology that will help users quickly find a particular moment or segment in a video. AI algorithms will automatically recognize key moments in a video and tag them, somewhat like chapters in YouTube videos. For example, a video of a baseball game will be labeled with time markers for moments such as home runs and strikeouts.

Google Lens is now even better

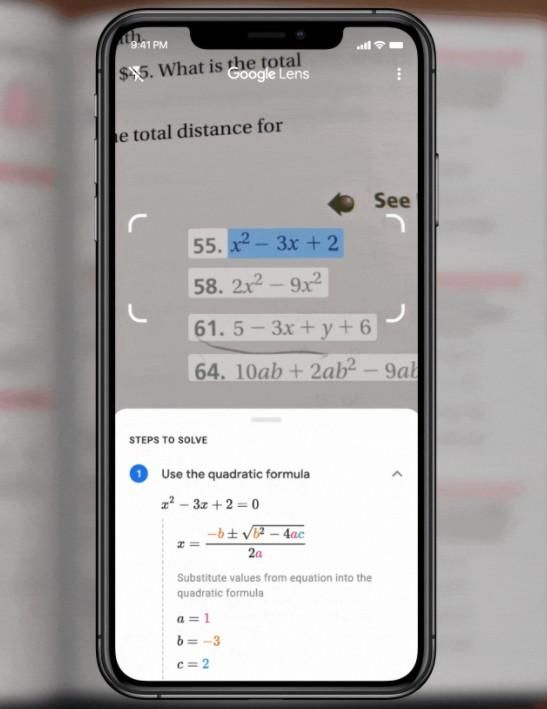

Google Lens can already do a lot of cool things, such as recognizing objects, extracting text from photos, and identifying codes. It is now getting even better, especially when it comes to education. Google Lens can now identify mathematics or science problems and show step-by-step solutions and guides to help students. This capability can be accessed from the Google app’s search bar on Android and iOS.

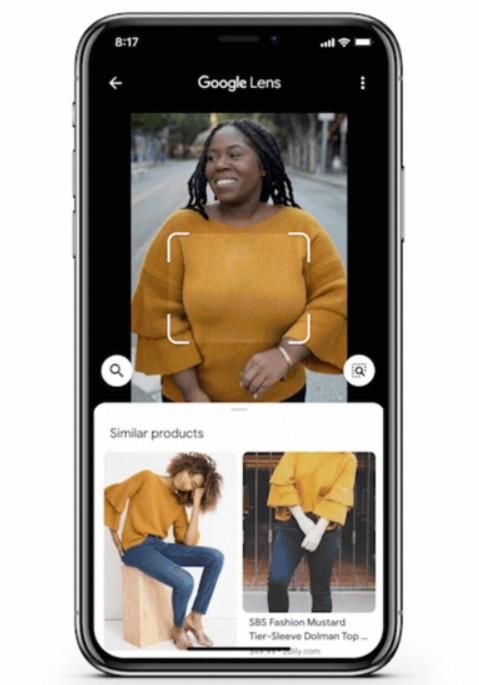

Another cool trick coming to Google Lens is an easier shopping experience, thanks to Style Engine technology. When users long-press an image while viewing it in the Google app or the Chrome browser on Android (coming soon to the Google app on iOS too), Google Lens will show matching items listed on e-commerce platforms so that users can easily find more information or purchase them.